Artificial Intelligence (AI) has become a household name, thanks to its ubiquitous applications and breakthroughs in fields such as conversational AI. From robotics to biology, and from computer vision to Natural Language Processing (NLP), advances in AI have enabled what was previously unimaginable. Computer architecture is no exception, given the increasing adoption of Machine Learning (ML) for designing intelligent cache, memory, and branch prediction systems. However, there remain areas within computer architecture where AI could prove invaluable but is held back by existing paradigms. One such area is workload performance prediction, which primarily relies on Amdahl’s law [1], typically formulated as a one-dimensional scaling equation that limits the applicability of AI. To address this problem, researchers at Intel Corporation, Dr. Chaitanya Poolla and Mr. Rahul Saxena, proposed a multi-dimensional extension that brings AI techniques to workload performance prediction [2].

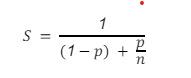

Amdahl’s law, proposed by Gene Amdahl in 1967, quantifies the efficacy of employing parallel resources in speeding up a program. The law views a program as a combination of serial and parallel components. Simply put, if p represents the parallel fraction, the speedup (S) achieved by a machine with n times the resources is given by:

$$S = \frac{1}{(1 - p) + \frac{p}{n}}$$

This equation represents Amdahl's law and provides key insights into the gains (S) achievable through parallelization efforts (n), while noting the fundamental limitation imposed by the non-parallelizable part (1-p). Further, the equation quantifies speedup when one factor or resource is scaled up while the others are held fixed. For example, scaling the number of cores at a fixed frequency results in one equation, and scaling the frequency at a fixed number of cores results in another. However, the net effect of scaling up both the number of cores and the frequency cannot be obtained by simply combining the two equations, because they rest on different underlying assumptions and omit interaction effects. Thus, the conventional formulation of Amdahl's law is too simplistic for analyzing the performance of the increasingly complex architectures of the AI era.
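To make the single-resource form concrete, the following minimal Python sketch evaluates the standard Amdahl expression S = 1 / ((1 - p) + p / n) and its upper bound 1 / (1 - p). The function names `amdahl_speedup` and `speedup_limit` are illustrative and do not come from the cited papers.

```python
def amdahl_speedup(p, n):
    """Speedup for parallel fraction p on a machine with n times the resources."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if n <= 0:
        raise ValueError("n must be positive")
    return 1.0 / ((1.0 - p) + p / n)


def speedup_limit(p):
    """Upper bound on speedup as n grows without bound: 1 / (1 - p)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return float("inf") if p == 1.0 else 1.0 / (1.0 - p)


# A program that is 90% parallel, run with 10x the resources.
example = amdahl_speedup(0.9, 10)
```

With p = 0.9 and n = 10 the speedup is about 5.26, and no amount of additional resources can push it past the limit of roughly 10 set by the serial 10%.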

To mitigate these limitations, Intel’s researchers reformulated the law to account for multiple resources that can be scaled simultaneously. Dr. Poolla and Mr. Saxena's approach centers on a multifactorial expression that captures both first- and second-order resource effects, prioritized using a hierarchical ordering principle. Importantly, they demonstrated that such an expression can be reduced to a linear regression model through a series of transformations. Their formulation integrates Amdahl's law with machine learning, enabling data-driven predictions of processor performance.
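The idea of reducing a multi-resource scaling expression to linear regression can be illustrated with a small sketch. It assumes, purely for illustration, that execution time takes the form T = w0 + w1/c + w2/f + w3/(c·f) for core-count scale c and frequency scale f, which is linear in the transformed features 1/c, 1/f, and their product. This functional form, the coefficients, and all names below are hypothetical and are not taken from the Poolla-Saxena paper.

```python
from itertools import product


def features(cores, freq):
    """Map resource scale factors to regression features (assumed form)."""
    x1, x2 = 1.0 / cores, 1.0 / freq
    return [1.0, x1, x2, x1 * x2]  # intercept, first-order terms, interaction


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_least_squares(rows, targets):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Made-up coefficients, used only to generate synthetic "measurements".
true_w = [2.0, 6.0, 3.0, 1.5]
grid = list(product([1, 2, 4, 8], [1.0, 1.5, 2.0]))
X = [features(c, f) for c, f in grid]
y = [sum(w * x for w, x in zip(true_w, row)) for row in X]

w = fit_least_squares(X, y)


def predict_time(cores, freq):
    """Predicted execution time for a configuration outside the training grid."""
    return sum(wi * xi for wi, xi in zip(w, features(cores, freq)))


# Predicted speedup of a 16-core, 2.5x-frequency design over the baseline.
speedup = predict_time(1, 1.0) / predict_time(16, 2.5)
```

The interaction feature is what a pair of independent single-resource equations cannot express: once it is included, scaling cores and frequency together is handled by one fitted model rather than by combining two separate laws.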

As with any good scientific theory, the theoretical formulation of the Poolla-Saxena study was backed by experimental validation to ensure real-world applicability. The study included four experiments spanning fifty-eight tests across four hardware platforms and two industry-standard benchmarks. To assess generalizability, the researchers designed each experiment to comprise 20 to 180 data points, varying in core counts, core frequencies, cache sizes, and memory characteristics. The dataset was prepared by collecting performance measurements across these designs. Using cross-validation, the researchers found that the proposed multi-dimensional formulation of Amdahl's law achieved prediction accuracies ranging from ~80% to 99%. These results underscore the potential of the extended Amdahl's law to capture the complex interactions relevant to performance prediction.
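A cross-validation procedure of the kind described above can be sketched as follows. This is a simplified, single-resource illustration rather than the study's actual protocol: the data are made up, the model is the one-dimensional form T = a + b/n, and "accuracy" is assumed here to mean one minus the mean absolute percentage error on held-out points, a definition the source does not specify.

```python
def fit_line(xs, ys):
    """Closed-form simple linear regression y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def k_fold_accuracy(ns, times, k=4):
    """Mean held-out accuracy, assumed to be 1 - mean absolute percentage error."""
    xs = [1.0 / n for n in ns]  # Amdahl-style feature: time is linear in 1/n
    scores = []
    for fold in range(k):
        test_idx = set(range(fold, len(xs), k))  # every k-th point is held out
        train = [(x, t) for i, (x, t) in enumerate(zip(xs, times))
                 if i not in test_idx]
        a, b = fit_line([x for x, _ in train], [t for _, t in train])
        errors = [abs((a + b * xs[i]) - times[i]) / times[i]
                  for i in sorted(test_idx)]
        scores.append(1.0 - sum(errors) / len(errors))
    return sum(scores) / k


# Made-up measurements: time = 2 + 8/n with a +/-1% deterministic perturbation.
core_counts = [1, 2, 3, 4, 6, 8, 12, 16]
run_times = [(2.0 + 8.0 / n) * (1.0 + 0.01 * (-1) ** i)
             for i, n in enumerate(core_counts)]

accuracy = k_fold_accuracy(core_counts, run_times)
```

Each fold fits the model on the training configurations only and scores it on configurations it has not seen, which is what makes the reported accuracy a measure of generalization rather than of fit.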

Dr. Poolla and Mr. Saxena’s extension offers significant improvement not just in performance prediction but also in the design and optimization of multi-core processors. For example, the derived analytical models make trade-offs between performance and cost easier to understand. The extended Amdahl’s law can also reduce the reliance on resource-intensive simulations when developing performance models across architectures and benchmarks. That said, the study also identifies limitations and suggests directions for improvement. These include designing optimal experiments for accurate and efficient estimation of model parameters, analyzing performance reachability, and learning to identify better features for prediction.

As the AI revolution takes center stage in today’s world, the computing technologies that power it become critical. The Poolla-Saxena study serves as a stepping stone for assessing the design and performance of computers. By extending Amdahl's law into multi-dimensional models that can be fitted with machine learning techniques, the study marks a shift in how performance analysis can be approached.

REFERENCES

[1] Amdahl, Gene M. "Validity of the single processor approach to achieving large scale computing capabilities." Proceedings of the April 18-20, 1967, spring joint computer conference. 1967.

[2] Poolla, Chaitanya, and Rahul Saxena. "On extending Amdahl’s law to learn computer performance." Microprocessors and Microsystems 96 (2023): 104745.

For media inquiries, contact:

Chaitanya Poolla,

Software Research Scientist,

Intel Corporation.

Email: chaitanya.poolla@intel.com

Source: Story.KISSPR.com
